One of the most important objectives for the Digital by Default Service Standard project was setting out a consistent way of measuring service performance. Why? Because all too often, there has been no shared understanding of how concepts like ‘customer satisfaction’ or even ‘cost per transaction’ are measured in government - which makes data-driven decision making difficult.

We wanted to create a set of measures that would help service managers to monitor and improve the performance of government services over time. Specifically, service managers need to be able to measure progress in three areas: improving the user’s experience of the service, reducing running costs, and shifting people towards using the digital channel.

The GDS design principles provided us with a starting point. They say that key performance indicators (KPIs) should define measures that are simple, require data that is easily collectable and generate actionable metrics.

We settled on four KPIs that met these requirements:

- Digital take-up: how many transactions are completed online?

- Cost per transaction: how much does it cost to provide each transaction?

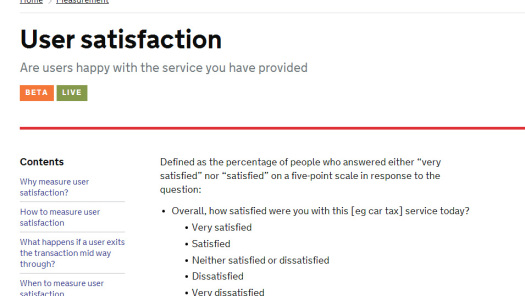

- User satisfaction: how highly do users rate a service?

- Completion rate: how many people start a transaction and then drop out?

The Government Service Design Manual provides more detail about how each of these is defined. To meet the service standard, all new and redesigned services must measure these four KPIs. But in terms of performance measurement, these are only the tip of the iceberg. Service managers will almost certainly want to add other KPIs to measure their more specific requirements.
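As a rough illustration of how the four KPIs listed above could be derived from raw counts, here is a minimal sketch. The formulas are simplifying assumptions made for this example, not the definitions in the Government Service Design Manual, which remain authoritative. The `ServiceCounts` fields, the 1 to 5 satisfaction scale and the sample figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ServiceCounts:
    """Hypothetical raw counts for one service over one reporting period."""
    digital_completed: int       # transactions completed online
    all_channels_completed: int  # transactions completed across every channel
    digital_started: int         # transactions started online
    total_cost: float            # total running cost across all channels, in pounds
    satisfaction_ratings: list   # survey scores, assumed to run from 1 (worst) to 5 (best)


def ratio(numerator, denominator):
    """Return numerator / denominator, or None when there is no data to divide by."""
    return numerator / denominator if denominator else None


def kpis(c):
    """Compute the four KPIs under the assumed (illustrative) formulas."""
    return {
        # Share of completed transactions that were completed online.
        "digital_take_up": ratio(c.digital_completed, c.all_channels_completed),
        # Total cost divided by completed transactions, all channels.
        "cost_per_transaction": ratio(c.total_cost, c.all_channels_completed),
        # Mean survey rating.
        "user_satisfaction": ratio(sum(c.satisfaction_ratings), len(c.satisfaction_ratings)),
        # Share of online transactions started that were also completed.
        "completion_rate": ratio(c.digital_completed, c.digital_started),
    }


# Made-up figures, purely for illustration.
example = ServiceCounts(
    digital_completed=600,
    all_channels_completed=1000,
    digital_started=800,
    total_cost=2500.0,
    satisfaction_ratings=[5, 4, 4, 3],
)
result = kpis(example)
```

Returning `None` for an empty denominator keeps "no data yet" distinct from a genuine score of zero, which matters when a service has only just gone live.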

For each of the KPIs, GDS will work with the teams building the exemplar services (23 transactions picked by departments as priorities for new or redesigned digital services). They'll set sensible goals for ‘what good looks like’, with interim milestones on the way towards meeting them. The goals set for the exemplars will then be applied to other, similar service transformations. After a service goes live on GOV.UK, this performance data will be regularly collected and made publicly available.

We’d really like to hear your views on the KPI guidance we’ve written, so please use the feedback option on the Government Service Design Manual and let us know what you think.

9 comments

Comment by Geoffrey Hollis posted on

How does one score a department that sends out in March 2013 forms urging the reader to access Direct.gov.uk, a site not used for 6 months? Is this the fault of DVLA, the Department concerned, or were they given insufficient notice of the change?

Whoever is responsible, it does not give this consumer much confidence in this brave new world.

Comment by Lara McGregor posted on

My organisation's customers represented 5-10% of Directgov traffic; we're likely to bring the same to gov.uk. Now, when we notice a mistake or identify a content need, instead of publishing the content ourselves via a CMS we have to submit it via an Excel spreadsheet to the department, who forward it to gov.uk for consideration. We have our own web analysts, designers and writers. Why do we have to use gov.uk? At least with Directgov it was a Word document we could email to an editor directly.

Meanwhile our call centre are circulating a Word document of gov.uk pages, seeing as there's no landing page or navigation to support our customer base and the range of content we need to offer.

All in all, you've created a slick design that conceals a content strategy which is even more dysfunctional than Directgov. Remind me, how are you supposed to be saving the government £1.2 billion?

Unless this is all part of a conspiracy to keep the public sector using MS Office documents?

Comment by Tim Manning posted on

I wondered when this issue would break surface. GDS should be viewed and developed as a 'distributed publishing system', based around common standards and infrastructure, not a centralised publishing house. Each department should maintain their own content. Different 'views' should be allowed, departmental, thematic etc.

Comment by Graham Hill (@GrahamHill) posted on

Clifford

Here are my thoughts on why the four measures you list are not enough, why you need to look at work on public value, service-dominant logic and value networks for better measures, and why that will help the new Digital by Default Service to do what it is there to do: deliver better digital services for citizens.

The four measures you list are all supplier-driven and output-related measures. None of the measures are citizen-driven nor are they outcome or impact related.

Digital take-up is a pure supplier, output measure. Not all citizens are digitally enabled and the measure is easy to game; just make it difficult or impossible to use the equivalent non-digital service.

Cost per transaction is also a pure supplier, output measure. Costs are only a small part of the bigger value picture and again, are easy to game. Just cut back on communication, training and other support mechanisms.

User satisfaction is a nod in the citizen outcome direction but as experience in the private sector shows, it is a difficult construct to measure. There are over 20 different structural models for customer satisfaction in common use.

Completion rate is another pure supplier, output measure. Even with the other measures it tells you little about what the user experience was like for the citizen nor whether there are better alternatives available (but that were not implemented).

The measures are designed to tell you how well YOU think the services operate; but tell you little about how CITIZENS think you are doing on service terms that are important to them.

To be frank, I think you must do much better than this if the digital services are not to fall at the citizen outcomes hurdle.

May I suggest you look at three sources for further information about creating a meaningful scorecard of measures that provide a more rounded view of the digital services you should be providing.

1. Work on PUBLIC VALUE

Extensive work has been done developing public value models that look at the value government ‘adds’ to the public through the services it provides. The public value work identifies the importance of a variety of measures of value including the services provided, fairness in service provision, delivering good outcomes and trust/legitimacy.

Start with the UK Govt Cabinet Office paper on Creating Public Value (available at http://webarchive.nationalarchives.gov.uk/20100416132449/http:/www.cabinetoffice.gov.uk/strategy/seminars/public_value.aspx). There are plenty of other good papers on public value available in the public domain.

2. Work on SERVICE-DOMINANT LOGIC

Extensive work has also been done developing models that evolve services from the supplier-driven, output-related models that dominate today, to more exchange-driven, outcome-related service-dominant logic models that will dominate them tomorrow. Service-dominant logic extends public value thinking by recognising that value is not created when services are DELIVERED to citizens, but in fact CO-CREATED when agencies interact with citizens during service touchpoints. Each party must bring the right knowledge, skills and experience to the interaction to co-create the most value for themselves.

Start with Vargo & Lusch’s seminal 2004 paper on Evolving to a New Dominant Logic for Marketing (available at http://sdlogic.net/JM_Vargo_Lusch_2004.pdf) and their 2008 update Service Dominant Logic: Continuing the Evolution (available at http://www.sdlogic.net/Vargo_and_Lusch_2008_JAMS_Continuing.pdf). There are plenty of other good papers on service dominant logic available at the Service Dominant Logic website.

3. Work on VALUE NETWORKS

Experience working with UK Govt departments redesigning services suggests that there are often many different agencies and agents involved in delivering effective services. Each is looking to co-create whatever it values from the services it provides: whether fulfilling a ministerial mandate, supporting citizens at risk, or getting help from a supportive government and its partners. Extensive work on value networks provides useful tools for identifying the value each agency or agent is looking for, the resources they must provide at critical touchpoints to do so and how value flows through the network of agencies and agents over time.

Start with Elke den Ouden’s work on Designing Added Value (available at http://alexandria.tue.nl/extra2/redes/Ouden2009Eng.pdf). There are plenty of other papers on modelling value networks from Prof. den Ouden, Verna Allee and Prof. Eric Yu available in the public domain.

All the evidence suggests that monitoring, measuring and managing new services is a critical driver of their success. But just measuring supplier-driven, output-related measures will not allow that to happen effectively. By looking at what we already know about public value, service-dominant logic and value networks, the new Digital by Default Service has a much higher chance of delivering the value to citizens that its digital services were created to deliver.

Graham Hill

@grahamhill

Comment by Tim Manning posted on

@grahamhill

If I was GDS, I would be inclined (!) to make a distinction between measuring the public value of a given public service and measuring the performance of a given transaction, e.g. how easy it is to submit my tax return, over the public value of people paying their taxes. Not to say that someone shouldn't be looking at this.

For many services, defining customer-driven measures is fairly straightforward. Although as I said, these are not always easily measured, particularly where outcomes occur some way down the road from the point of transaction.

Comment by cliffordsheppard posted on

Thanks to everyone for their comments.

Our KPIs were developed with potential service managers to help them measure and monitor the performance of services over time, and to enable some comparisons between different services over time. Of course, measuring public value is essential too, but that was not our aim with the standard (GDS already measures things like savings elsewhere).

We particularly wanted to identify a core set of metrics that we apply to all services. Some indicators, such as completion times, are less relevant to some services; for example, Lasting Power of Attorney applications require careful consideration at each stage, and speed is not a useful measure of performance. Service managers will still be able to use other measures to help iterate and improve their own services, including some of those suggested on this forum, and we will encourage them to do so.

Comment by Tim Manning posted on

Although this is a good start and I'm all in favour of consistency, this is an area that requires much more robust thinking than seen in the past.

Firstly, I wouldn't make a distinction between digital and other forms of service delivery. Ultimately, it's a continuum anyway - from full digital, through hybrid forms to non-digital. So let's have one standard for the lot.

"They say that key performance indicators (KPIs) should define measures that are simple, require data that is easily collectable and generate actionable metrics."

They may say this, but it is not a good principle. KPIs should measure what matters to the customer, period. These measures may be neither simple, nor be supported by data that is easily collectable. Following the above principle can lead to services that meet the needs of the measures, but not those of the customer.

The obvious example is appointment times. Customers want an appointment typically within a certain time, but on a date/time of their choosing. But more often than not, someone decides that what is important is simply how quickly they get an appointment - which is coincidentally rather easier to measure. This can lead to some strange service behaviour, as found in your average GP surgery!

End-to-end transaction times can be very difficult to measure, particularly for hybrid digital/non-digital services. Again, simply measuring the digital component can lead to some strange end-to-end customer experiences, due to the design distortion that it can introduce.

If I was defining some basic principles around KPIs, they would focus on: a simple understanding of the 'theory of variation'; 'service capability' and its measurement; ‘nominal value’ and 'Loss Function'; and behavioural science. The latter is particularly relevant to what you can truly glean from your average user survey. More can be found at: http://design4services.com.

Comment by Jan Ford posted on

I agree with David. The key outcome has to be in terms of value. Also the metrics need to be contextual. Volume could be influenced by factors other than quality of service. Transactions could be completed but are only part of the citizen's end-to-end process experience. They are good simple measures but without context could result in perverse interpretation. Good stuff but needs a wraparound.

Comment by David Dinsdale posted on

Have used the feedback tool to provide suggestions as requested. In summary, would be good to enhance the feedback to capture economic impact e.g. time and money saved.